The Great Stagnation: A misleading narrative

Back in 2016 I wrote a Nintil classic, No great technological stagnation, where I argued that, as far as we could see from public data, improvements in various technologies do not seem to be slowing down. If I were to rewrite it now I would note that trends in batteries and solar panels have continued, and I would add some new trends, like the one for single-cell sequencing (a whole new category that didn't exist until 2009).

There is one problem with my analysis there that I left open:

Are there fewer 'really innovative' innovations? [That is, breakthroughs, paradigm shifts, historically significant inventions]

Because it could be the case that each of the existing technologies was progressing just fine BUT at the same time the number of new ones (That would not just be another dot in the chart) could be going down.

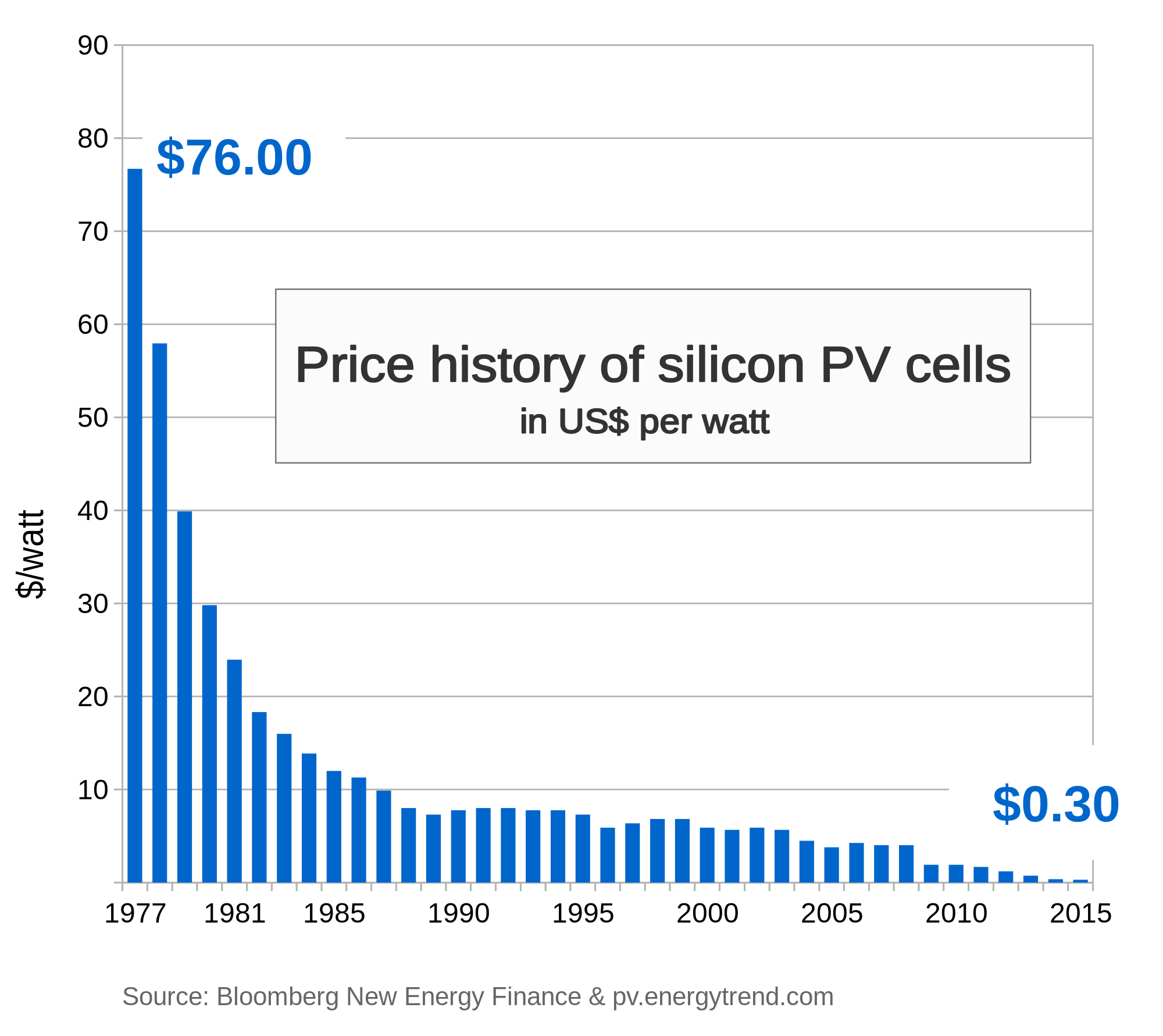

Breakthroughs are harder to measure. Looking at the curve of, say, the price of photovoltaic cells, it is easy to point out progress there. It's a number, the number goes down, progress happens. Done.

But progress in "science" or "technology" or the rate of "breakthroughs" is harder to measure. I didn't choose to go look for all those curves because I thought that such an exercise fully captures "science" or "technology"; I did it because it's what is available to quantify rates of progress. At the same time I caveated that such an analysis has its limits. What would it take to say that we are living through a technological stagnation?

David Chapman recently wrote an essay criticizing the maxim that "the map is not the territory". Isn't it obvious that the map is not the territory? Well but that misses the point. Believing that "the map is not the territory" implies a potential reification of the "map" as a thing and the "territory" as another thing. The actual point that Chapman makes there is that reality is messier than it seems, it's not just that the map is an imperfect representation of the territory: there are many maps. And there is a whole set of skills needed to know what map to use that are not directly implied by the maxim. Oh and more often than not there is no clearcut territory to point to. There is no there there.

My view of the great stagnation is similar. I think the framing "great stagnation" is misguided insofar as it seems to nudge us towards a single cause and towards thinking that everything is stagnating (That's the 'great' there). All these charts showing that "something happened" in 1970 have been leveraged to point to a single event that caused all sorts of things, from a slowing down of the R&D machine to slower TFP growth and median GDP growth. Yet we don't see trends in technological progress slowing down in 1970; it is not the case that progress in technology and science slowed down across the board at any point in time. Yes, great stagnation doesn't mean this if one closely reads some of the authors that have written on it. They will clearly talk about TFP and GDP. These are clean, crisply defined indicators, and yes, there is a stagnation there. The framing also seems to push us towards spending time doing conceptual engineering to understand what "science", "technology", "stagnation", or "progress" are. I argued in the past that progress is inherently a nebulous idea, and so are the other ones. A good enough definition is enough to tell others what we mean. We can settle for that and move on.

But this, that there is indeed a TFP stagnation, does not imply that it is the R&D sector that is somehow at fault (Though it can also be that). It could be purely an institutional problem. It could be declining fertility. The science stagnation question is harder than measuring a stagnation in TFP or GDP growth. Science, unlike GDP, is not something that can be measured with a number. Countless are the times where someone has joked that we are living through a scientific golden age because more papers have been published than ever before. Of course we know that more papers does not mean more science. But what does?

One could try to come up with lists of General Purpose Technologies, the OG GPTs before GPT was cool, but ask different people what those GPTs are and they will tell you different things. Or some will say that we are just about to see the next GPT, that all the stagnation is just temporary, so nothing to worry about!

Ultimately those of us that study progress care about the same things: We want more of the good stuff and less of the bad stuff, and we want it to be so using fewer inputs for a given amount of output. The good news is that achieving that goal does not require us to answer whether "there is a technological or scientific stagnation". That question may be better answered with 無.

So here's a better idea: look at specific problems and think of potential solutions for them. Not every problem can be solved, and the likelihood that something cannot be solved, coupled with its usefulness, can be used to inform resource allocation. For example it seems that faster than light travel is not allowed by physics. Maybe warp works? Probably not. Huge if true. Worth funding a handful of people to work on that. But not betting a sizable chunk of the R&D budget when there are other things to do.
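The allocation logic above can be made concrete with a rough sketch. Everything in it is an assumption for illustration: the `Problem` fields, the `allocate` helper, and especially the probabilities, payoffs, and costs, which are made-up placeholders and not estimates of anything. The idea is only that a tiny chance of success times a huge payoff can justify a small, non-zero share of the budget.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Problem:
    name: str
    p_solvable: float  # subjective probability that the problem can be solved at all
    payoff: float      # value if solved (arbitrary units, hypothetical)
    cost: float        # cost of a serious attempt (same units, hypothetical)


def expected_value_per_cost(problem: Problem) -> float:
    """Expected payoff per unit of cost: likelihood times usefulness, over cost."""
    return problem.p_solvable * problem.payoff / problem.cost


def allocate(problems: list[Problem], budget: float) -> dict[str, float]:
    """Split the budget in proportion to each problem's expected value per cost."""
    scores = {p.name: expected_value_per_cost(p) for p in problems}
    total = sum(scores.values())
    return {name: budget * score / total for name, score in scores.items()}


# Placeholder numbers only: "huge if true" but very unlikely, versus
# modestly valuable but very likely.
PROBLEMS = [
    Problem("warp drive", p_solvable=1e-7, payoff=1e9, cost=1.0),
    Problem("better batteries", p_solvable=0.9, payoff=1e4, cost=1.0),
]

shares = allocate(PROBLEMS, budget=100.0)
for name, share in shares.items():
    print(f"{name}: {share:.1f}% of the budget")
```

With these placeholder inputs the long shot gets a sliver of the budget and the likely win gets the rest. Proportional allocation is just one simple rule; a real portfolio would also care about diminishing returns and about how uncertain the inputs themselves are.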

What about curing cancer? Why isn't cancer cured yet? Well, there we can point to a whole host of reasons specific to the current field of oncology, and we could then go deeper into the biology of cancer or the funding ecosystem that supports said research. The knowledge needed to do that is very domain specific and not related to what we need to build spaceships or nuclear reactors.

Why don't we have flying cars? Is it that the batteries were too heavy and expensive? Perhaps yes, but they have been making lots of progress lately so maybe it's not that. Is it the lack of a regulatory framework? Probably; in which case the right answer is lobbying and making the case for them to the relevant regulator. Or maybe they are simply not cost-effective. Coolness does not imply usefulness. To study flying cars we need to think about flying cars, lithium batteries, composite materials, the fluid mechanics of low-noise fans, the economics of short distance flights, and their regulations. By doing this we get up close and personal with the subject matter and we get away from the very high level "great stagnation" view.

This all said, the proverbial flying cars will eventually come. There's Lilium, there's Kitty Hawk, there's Joby. There are many others around. It's not due to lack of trying. Obviously building flying cars is possible. In fact we had prototypes back in 1949. It is tempting to say that if flying cars were as easy as Storrs Hall makes them seem we would have them already, therefore he's wrong. But that would be somewhat complacent. Instead the fair thing to do is look at why the actual efforts to build flying cars have stalled. Regulations, not physics, engineering, or economics, may be part of "it". Perhaps the industry initially tried to push for personally owned flying cars and that bumped against regulations; now the emphasis seems to be on point-to-point flying taxis where the customer has no control over the vehicle, and perhaps that is more to the taste of the regulators.

Perhaps it's a combination of constraints: You could do horizontal takeoff winged flying cars (So you don't need to lift the weight with thrust) but then you need a runway so now you are limited to customers that live near an airport. So you need VTOLs. But VTOLs need lots of thrust, implying more power, more weight for fuel or batteries. If using fuel, you have a very hot exhaust directed towards the ground where there may be people, making it unsafe if taking off and landing in an uncontrolled (i.e. not an airport) environment, so you need to make it electric or use turboshafts. If the range provided is too short then customers may just get a regular car. Batteries have been getting better so that constraint is now being lifted. But VTOLs may be noisy as well so we needed improvements in blade geometry to reduce noise. Having multiple smaller propellers instead of a big one also helps with noise (Same principle as with aircraft turbine chevrons). Maybe a problem is just size: if you can't park near the local grocery store because your flying car is big, you may decide to stick with the regular car if you can't afford to own two cars.

This discussion could of course be longer, but the point is that when you see lots of attempts taking a long time, perhaps the problem is not complacency and the domain is harder than it looks to those of us who are not in the industry. Perhaps we would look like the impatient boss in this story:

The year is 10000. Compute power has increased due to improvements to ISAs and materials to better cool processors. But feature size has been stuck at the 1nm level for a while. Somewhere deep in TSMC (Intel went broke in 2050), someone is being yelled at. "We've been on 1nm for decades now! At historical rates of progress we should be building chips under the Planck scale now! Why are you so slow!" "Sir, for the nth time, ~~I'm the janitor~~ fundamental physics prevents us from continuing historical trends from the XX and XXI centuries. Can't do that! We are focusing on producing better coprocessors and increasing power efficiency in other ways" "Why such complacency! Just keep trying!" And trying they kept, as they had for the previous millennia.

Rampant optimism is a recipe for constant disappointment and self-flagellation. Just because complacency is bad doesn't mean we should be making unrealistic demands of builders or reifying historical trends and demanding that they continue into the future. Even Boom Supersonic, entrepreneurial startup mentality and all, is taking as long as traditional aerospace companies to get their aircraft in the air. Is it reasonable to head down to Denver, Colorado and yell at Blake Scholl "Dude, why so slow!?"? Of course not.

It is only by coming down from the narratives and abstractions into the concrete and specific that we can start to think of concrete domain-specific actions, roadmaps, or bottleneck analyses that could be done to make more progress in problems that matter. This is, I think, the right way to think about the stagnation. Leave the big picture aside, and do a root cause analysis for why the world is not as awesome as it should be right now. If in the process of doing that we discover a single root cause (Is society as a whole getting complacent?), great. But it's unlikely that just that is the cause, or even the main cause, of less-than-maximal rates of progress in every single field, including those that are living through an actual golden age.

Addendum: What if something happened in 1970?

Something could have happened in 1970. That is not something I reject. Suppose, for the sake of argument, that this one thing was going off the gold standard. Or something about oil. Suppose then that this is the cause of the economy-wide slowdown in TFP (Which is real); that is, that even though the science engine is running fine, the transmission link between that and the rest of the economy has broken down. Or that the 1970 shock indirectly induced a worsening of institutional quality that in turn leads to, say, rising housing prices via misguided urban planning. Would that invalidate this article?

I don't think so. Above I granted that there is a great stagnation in TFP and GDP. I questioned that such a stagnation is a technological stagnation across the board, and argued that rather than trying to figure out if it is or not, we should go case by case and see why things go fast or slow. For the actual great stagnation, one could do an analysis of why, at the economy level, GDP growth slowed down, and maybe one finds a single cause or many. This grants that the stagnation is not great in the sense that everything is slowing down (Hey, some technologies are doing well!) and puts our focus on the concrete things that are going badly, and perhaps contrasting the accelerated sectors with the decelerated ones helps tease out why the latter are how they are.

Dietz Vollrath attempted this and found a series of causes that, in his view, turn out to be hard to fix. Hard is not impossible. If he says it's fertility rates going down I say aim for one billion Americans, and if he says it's an aging population I say aim for longevity research. Maybe Dietz is wrong. Maybe there was a monocausal event in 1970. Maybe I should write Nintil's take on what happened in 1970; that chart looks the way it does for a reason, presumably, and we can go and find out.
