Consciousness and its discontents

Here I present some thoughts about consciousness, especially arguing against the idea that consciousness is somehow not real, or an illusion.

Descartes famously originated the cogito ergo sum. He argued that even if your senses deceived you, you could still be sure that you were conscious. So consciousness would be the Tier 1 truth among the beliefs we hold. This is indeed the case for me, at least.

Consider the following set of things: quantum field theory, the theory of relativity, neuroscience, cognitive science, evolutionary theory, external world realism, physicalism, naturalism, and materialism.

Now here is another thing: consciousness.

I would rather commit the first set of things to the flames than give up consciousness as a real thing. Given that I just said this, one might think that I should not debate the issue further, since it would be pointless to try to change my mind. But this is not true. My mind can still be changed: as a fallible being, I can in principle be mistaken in assigning something a prior resembling a Dirac delta. But I will argue that this is not the case, and that I am not mistaken.
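To make the "Dirac delta" point concrete, here is a minimal sketch of Bayes' rule in odds form. The function name and the numbers are illustrative assumptions of mine, not anything from the literature. It shows that a prior of exactly 1 can never be moved by evidence. A prior merely very close to 1 can still be overturned, but only by evidence that is overwhelmingly more likely if the belief is false.

```python
def posterior(prior: float, likelihood_ratio: float) -> float:
    """Return P(H | E) via Bayes' rule (illustrative helper).

    prior            -- P(H), the credence in H before seeing the evidence E
    likelihood_ratio -- P(E | H) / P(E | not H); values below 1 count against H
    Note: prior == 1 together with likelihood_ratio == 0 is undefined
    (the evidence is impossible under a certain hypothesis) and raises ZeroDivisionError.
    """
    if not 0.0 <= prior <= 1.0:
        raise ValueError("prior must be between 0 and 1")
    if likelihood_ratio < 0.0:
        raise ValueError("likelihood ratio must be non-negative")
    weighted = prior * likelihood_ratio
    return weighted / (weighted + (1.0 - prior))


# A literal Dirac delta: a prior of exactly 1 ignores any evidence with a nonzero likelihood ratio.
print(posterior(1.0, 1e-6))                    # 1.0

# A near-delta prior barely moves under evidence a million times likelier if H is false...
print(round(posterior(1 - 1e-9, 1e-6), 3))     # 0.999

# ...but it can still be overturned by evidence that is vastly stronger still.
print(round(posterior(1 - 1e-9, 1e-12), 4))    # roughly 0.001
```

In this picture, defeating a near-certain seeming requires evidence whose likelihood ratio is even more extreme than the prior. That is the sense in which one would need a premise stronger than "I am conscious".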

Note that I have not said that rejecting consciousness amounts to rejecting the things in the first set. There is nothing incoherent in accepting eliminativism except for its conflict with consciousness. So if one rejects just that, one need not reject any further beliefs in the mentioned sciences.

Some methodological notes

How are we justified in believing things? The best answer I have seen is a form of foundationalism: we are justified in believing P if it seems to us that P and there are no defeaters for our belief in P (Huemer 2007, Moretti 2015). In this framework, we would deal with the Müller-Lyer illusion, for example, by saying that it seems to us that one line is longer than the other, but that we have a defeater for that seeming: we have measured the lines. So we would not be justified in believing that the lines are as they seem. In doing this, we have appealed to more solid seemings: that the ruler is straight, that our eyes judged accurately when measuring the lines, and so on. With consciousness, the default position is that we are indeed conscious and that consciousness is real. But are there defeaters for that position? As I suggested above, to dislodge the belief in consciousness realism we would need a premise more certain than 'I am conscious', and there are no such premises. The eliminativist, of course, disagrees.

One could instead take a coherentist approach, and use coherence as a criterion of truth. If one does this, one may find that eliminativism coheres very well with science and that consciousness realism is more of an issue, a dangling isolated thing away from a big cluster of interconnected beliefs about the physical world. I am no fan of coherentism, but one need not be committed to eliminativism if one is a coherentist. Perhaps it could be argued that, nonetheless, consciousness is tightly linked to every other belief via the fact that to have beliefs we have to be conscious.

The Hard Problem

Michael Huemer's exposition of the mind-body problem will serve as a good introduction to the issue I want to discuss:

Consider the following five tenable theses:

1. For any system, every fact about the whole is a necessary consequence of the nature and relations of the parts.
2. People are made of atoms.
3. Atoms are purely physical objects, with nothing but physical properties and physical relations to one another.
4. People have mental states.
5. No statement ascribing a mental predicate can be derived from any set of purely physical descriptions.

Different positions on consciousness and its relation to the brain accept or reject different subsets of these. They cannot all be held at the same time, yet all of them seem plausible. Premise one is essentially a statement of ontological reductionism, which seems true to me. Premises two and three are what we know about the universe (substitute fields, quarks, etc. for atoms if you prefer). Premise four seems true by introspection. Premise five can be taken to mean:

In (5), "derived" means "logically derived," that is, derived in the sense in which the fundamental theorem of calculus can be derived from the axioms of arithmetic and some definitions. It does not mean "caused". This means that it would not be possible to deduce the quality of someone's conscious experiences from a physical description of him or of anything else. If a physical description of the universe is given, it will always be an additional piece of information, e.g., to note that someone is in pain. [...]

Finally, there is (5). What reason is there for thinking that is true? Well, we can compare it with a number of similar principles to get the general idea. In moral philosophy, there is a principle sometimes called Hume's law that says it is not possible to derive a normative judgement from a descriptive judgement. A normative judgement is a judgement about what is good or bad, right or wrong, and a descriptive judgement is basically anything else. Another way this is stated is that you cannot deduce an "ought" from an "is": you can't derive what ought to be the case solely on the basis of what is the case. This principle is almost universally acknowledged. And it is merely part of a more general pattern. For example, you can't derive a statement describing distances from any set of statements that don't describe distances. You can not derive a statement about colors from any set of non-color statements. You can't derive geometrical statements from non-geometrical ones. And generally, if you have an inference in which the conclusion talks about one thing and the premises talk about something else, the inference is invalid. In the same way, it is a conceptual truth that you cannot derive a mental description from a physical description. After all, just consider some physical concepts, such as spatial/geometrical properties, mass, force, and electric charge. Is it plausible that there is any way that these concepts could be used to explain what it feels like to be in pain? Say whatever you like about masses, positions, and forces of particles, you will not have ascribed any mental states to anything.

Eliminativists will deny 4. Cartesian dualists will deny 2. Idealists will deny 2-3. Property dualism might deny 1 or 3, and the mind/brain identity theory could deny 1 or 5. Panpsychists reject 3.

Some people say that deciding which systems are conscious is up to us. This does not run counter to premise five. Saying things like "I consider as conscious those systems that exhibit such and such external behaviour, that are internally constituted in such a way, and that have a certain degree of Phi" includes a reference to consciousness. I disagree with this proposal, but it will still amount to the rejection of one of the premises.

How mind relates to brain

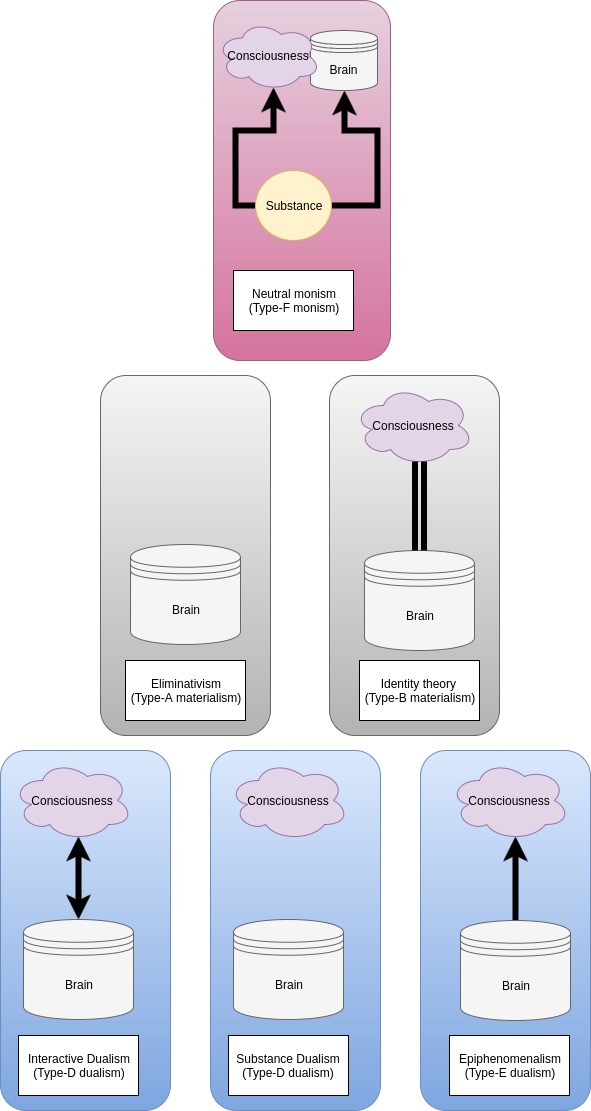

What are all the possibilities? Here's a handy chart, adapted from Chalmers (2003)

(Idealism is deliberately absent from the table. Substance dualism is there but is not further discussed)

The summary for these positions is something like this (Diagram not arranged in order of plausibility):

- Type-A materialism: Consciousness doesn't exist

- Type-B materialism: Conscious states are brain states. When we say 'I see yellow' this is a poetic way of saying 'Such and such neurons fired in such way'

- Type-D dualism: Consciousness exists and interacts with the physical.

- Type-E dualism: Consciousness is a property of certain physical systems (maybe of certain complexity, composition, etc), but consciousness is causally impotent over physics

- Type-F neutral monism: Mind and matter are both aspects of a third substance.

The materialist dance

So let's suppose that we want to grapple with the problem above, and we want to accept consciousness. What would a sensible materialist say? One can achieve peace of mind by embracing eliminativism, at the cost of denying the obvious, or one can remain in a state of uncertainty and keep one's belief in consciousness. By the materialist dance I mean the switching of positions that may happen to one as one considers possible explanations for consciousness.

So we begin pondering the idea of consciousness. We know things about physics, and we don't believe or want to believe in anything weird or spooky. We also want to keep our belief in consciousness, so we begin by rejecting eliminativism.

Then we think hard and come up with the idea that consciousness is really a poetic name for certain patterns of brain activity. This is the identity theory. It doesn't require any modification to physics, and it allows one to say that consciousness is real. Nice, isn't it?

Sean Carroll, in his latest book, The Big Picture, defends this view:

If there is any one aspect of reality that causes people to doubt a purely physical and naturalist conception of the world, it’s the existence of consciousness. And it can be hard to persuade the skeptics, since even the most optimistic neuroscientist doesn’t claim to have a complete and comprehensive theory of consciousness. Rather, what we have is an expectation that when we do achieve such an understanding, it will be one that is completely compatible with the basic tenets of the Core Theory—part of physical reality, not apart from it.

Why should there be any such expectation? In part it comes down to Bayesian reasoning about our credences. The idea of a unified physical world has been enormously successful in many contexts, and there is every reason to think that it will be able to account for consciousness as well. But we can also put forward a positive case that the alternatives don’t work very well. If it’s not easy to see how consciousness can be smoothly incorporated as part of physical reality, it’s even harder to imagine how it could be anything else. Our main goal here is not to explain how consciousness does work, but to illustrate that it can work in a world governed by impersonal laws of nature.

In this chapter and the next we’ll highlight some of the features of consciousness that make it special. Then over the following few chapters we’ll examine some arguments that, whatever consciousness is, it has to be more than simply a way of talking about ordinary matter in motion, obeying the conventional laws of physics. What we’ll find is that none of those arguments is very persuasive, and we’ll be left with a greater conviction than we started with that we human beings are part and parcel of the natural world, thoughts and emotions and all.

In the process of the discussion, Carroll is forced to admit some weird things: for example, that the Chinese room is conscious, or that a giant army of people passing messages to each other, simulating someone's brain, is also conscious (in the sense that there is something conscious above the individual consciousnesses of its members).

Imagine mapping a person’s connectome, not only at one moment in time but as it develops through life. Then—since we’re already committed to hopelessly impractical thought experiments—imagine that we record absolutely every time a signal crosses a synapse in that person’s lifetime. Store all of that information on a hard drive, or write it down on (a ridiculously large number of) pieces of paper. Would that record of a person’s mental processes itself be “conscious”? Do we actually need development through time, or would a static representation of the evolution of the physical state of a person’s brain manage to capture the essence of consciousness.

These examples are fanciful but illustrative. Yes, reproducing the processes of the brain with some completely different kind of substance (whether neuristors or people) should certainly count as consciousness. But no, printing things out onto a static representation of those processes should not.

From a poetic-naturalism perspective, when we talk about consciousness we’re not discovering some fundamental kind of stuff out there in the universe. It’s not like searching for the virus that causes a known disease, where we know perfectly well what kind of thing we are looking for and merely want to detect it with our instruments so that we can describe what it is. Like “entropy” and “heat,” the concepts of “consciousness” and “understanding” are ones that we invent in order to give ourselves more useful and efficient descriptions of the world. We should judge a conception of what consciousness really is on the basis of whether it provides a useful way of talking about the world—one that accurately fits the data and offers insight into what is going on.

As poetic naturalists, that’s basically what we’ll be doing. The attributes of consciousness, including our qualia and inner subjective experiences, are useful ways of talking about the effective behavior of the collections of atoms we call human beings. Consciousness isn’t an illusion, but it doesn’t point to any departure from the laws of physics as we currently understand them.

According to poetic naturalism, philosophical zombies are simply inconceivable, because “consciousness” is a particular way of talking about the behavior of certain physical systems. The phrase “experiencing the redness of red” is part of a higher-level vocabulary we use to talk about the emergent behavior of the underlying physical system, not something separate from the physical system. That doesn’t mean it’s not real; my experience of redness is perfectly real, as is yours. It’s real in exactly the same way as fluids and chairs and universities and legal codes are real—in the sense that they play an essential role in a successful description of a certain part of the natural world, within a certain domain of applicability.

The idea that our mental experiences or qualia are not actually separate things, but instead are useful parts of certain stories we tell about ordinary physical things, is one that many people find hard to swallow.

You can also insert here Daniel Dennett's view.

On this view, consciousness is not really real, but merely real. Isn't this like eliminativism? Carroll doesn't really answer this in the book. But a charitable interpretation would be something like: "Yes, sure, consciousness is not real in the strong sense, but we can still tell stories about it and talk about certain parts of the universe using that word". Compare, in ethics, the position of the ethical relativist: "Yes, sure, ethics is not real in the moral realist sense, but it is useful to say that this or that is right or wrong as a shorthand for 'society thinks so'". In the end, these amount to eliminativism and nihilism respectively, merely sugarcoated with poetry. Poetic naturalism doesn't attempt to hide this: it even has "poetic" in its name. But when pressed, the poetic naturalist, or the identity theorist, will have to concede that on the common meaning of "real", consciousness is not real for them. There are no qualia, no thoughts, no feelings, no beliefs. Only interactions between neurons, and nothing else.

But then we are all philosophical zombies. Choosing to call things by different names does not change the way fundamental reality is. Calling a spoon a fork does not make it a fork. Saying that 'the movement of atoms in this way' is consciousness does not make it so (in the realist sense). And so zombies would then be not only conceivable, but actually actual! Indeed, Brian Tomasik, an eliminativist, when asked whether he believes that we are all zombies, says yes, we are.

This might seem hard to swallow, so one then may turn to epiphenomenalism. Here we see the familiar stories of 'The brain is a complex system blah blah' or 'Consciousness is a special thing that certain biological systems do, and that cannot be replicated in silico just by replicating its functioning' (Searle and Pigliucci). I used to believe this.

If we do this, we have abandoned materialism, and entered dualism. Some people might protest and insist that epiphenomenalism is still materialist, but in academic philosophy of mind, materialism means type-A or type-B theories. So what?, one might say.

Well, it so happens that if consciousness is epiphenomenal, then what causes me to talk about consciousness is not consciousness. Consciousness does not cause anything if we subscribe to epiphenomenalism. Eliezer Yudkowsky makes the argument here. This leads to the weird situation where my zombie would be writing the same things as I write, but without being conscious. Wouldn't that be weird? Why would my zombie be talking about consciousness if it is not conscious? Equally, if consciousness is not causing me to write about consciousness (in epiphenomenalism, causality runs only in one direction), then why am I writing about consciousness?

I wrote an answer to the inconceivability of zombies here, but epiphenomenalism is still a troubling view. I can conceive of a universe of zombies that do everything we do but are all eliminativists about consciousness. But a zombie world exactly like ours, where everyone talks about consciousness just as we do, seems to suggest that what we do regarding consciousness has nothing to do with consciousness itself. I think that I write about consciousness because I am conscious. But maybe I write about consciousness because I can't help it, and in addition I am deluded: assuming determinism, my mind is only a camera that mistakenly thinks it is causing things when it actually is not. That would be an illusion. If so, the situation is not that weird. Adopting the 'everyone is a mechanical robot' view, where we step back and see everything as the raw laws of physics doing their job, I can see myself as a body pushed around by physical forces and stimuli, writing stuff, while this camera that is my mind looks on. Of course, being a passive spectator is boring, so it forms the belief that it is actually in charge. I am not endorsing this view, just showing how it could be that consciousness does not cause my words, and thus how zombies are conceivable.

But let's say that we don't like the epiphenomenal explanation because we are convinced that consciousness does something, as I (and Eliezer) seem to be. Then we jump onto the interactionist dualist ship. We are then immediately faced with the QFT argument. Sean Carroll again:

On meter-sized scales, relevant to bending a spoon with your mind, the strongest possible allowed new force would be about one billionth the strength of gravity. And remember, gravity is far too weak to bend a spoon.

That’s it. We are done. The deep lesson is that, although science doesn’t know everything, it’s not “anything goes,” either. There are well-defined regimes of physical phenomena where we do know how things work, full stop. The place to look for new and surprising phenomena is outside those regimes. You don’t need to set up elaborate double-blind protocols to pass judgment on the abilities of purported psychics. Our knowledge of the laws of physics rules them out. Speculations to the contrary are not the provenance of bold visionaries, they are the dreams of crackpots.

While he argues against telekinesis, one could extend the argument to consciousness. If consciousness causes things, then we should be able to detect the corresponding force. According to a handy chart on Wikipedia, gravity is, to begin with, extremely weak at small scales compared to the other forces, so something even weaker would have a negligible effect on the brain, swamped by the other forces. Still, this doesn't rule out the possibility. A minuscule force, or a consciousness field, might be all that is required! Implausible is not impossible!
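For a sense of scale behind "gravity is extremely weak at small scales", here is a rough back-of-the-envelope sketch. It is my own illustration, not Carroll's, and the physical constants are approximate CODATA 2018 values hard-coded as assumptions. It compares the electrostatic repulsion between two protons with their gravitational attraction. Both forces fall off as 1/r^2, so the separation cancels out and the ratio is independent of distance.

```python
# Approximate CODATA 2018 constants in SI units (assumed values, hard-coded for illustration).
K_E = 8.9875517923e9        # Coulomb constant, N m^2 / C^2
E_CHARGE = 1.602176634e-19  # elementary charge, C (exact by definition)
G = 6.67430e-11             # gravitational constant, N m^2 / kg^2
M_PROTON = 1.67262192e-27   # proton mass, kg


def coulomb_to_gravity_ratio() -> float:
    """Ratio of electrostatic to gravitational force between two protons.

    F_coulomb = K_E * e^2 / r^2 and F_gravity = G * m_p^2 / r^2,
    so the 1/r^2 factors cancel and the ratio does not depend on r.
    """
    return (K_E * E_CHARGE**2) / (G * M_PROTON**2)


print(f"{coulomb_to_gravity_ratio():.2e}")  # on the order of 10^36
```

A hypothetical "consciousness force" weaker than gravity would therefore sit some 36 orders of magnitude below the electromagnetic forces already acting on the same particles. That is why it would be swamped, though, as noted above, not strictly ruled out.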

Note that Carroll says that:

any new forces that might be lurking out there are either (far) too short-range to effect everyday objects, or (far) too weak to have readily observable effects.

And we might only need tiny forces acting on ions or atoms in the brain. The interactionist might then want to look at proposals like Bohm's, Penrose's, Popper's or Stapp's, which posit some sort of quantum effect mediating consciousness (the infamous 'quantum mechanics seems weird, and so does consciousness, so maybe they are related'). But this may sound too spooky to some ears, and so interactionism is another view abandoned in the materialist dance.

So we then turn to neutral monism. Of the possible solutions to the conundrum of consciousness, it is the least straightforward to understand. My understanding of its motivation is this: imagine the universe filled with balls moving around. Arrows between the balls, the laws of physics, describe their interactions. But what are the balls? What is their intrinsic nature? Or, to use David Pearce's favourite expression, what breathes fire into the equations? And so inside those balls, we find phenomenal properties. Everything would have a tiny bit of consciousness in it: panprotopsychism. This view preserves the laws of physics as they are, keeps the belief in consciousness, and doesn't appear to run into the 'nothing causes my writings on consciousness' problem, as it claims that physics is consciousness, not that consciousness is an epiphenomenon.

To get your head around it: the idea is that if we say the universe is made of fields, those fields are ultimately fields of phenomenal experience (yes, it still sounds weird). Your head is physically implementing some very complex calculation in a very specific way, and there is something it feels like to be that calculation, being implemented and 'unfolded by Nature'.

Brian Tomasik has a reply to this here. Basically, what would be wrong with neutral monism is that, since it leaves the laws of physics unchanged, it still cannot causally trace our belief in consciousness to consciousness itself. A second problem is that the micro-particles of consciousness still have to be aggregated into a whole, unified consciousness (the combination problem).

If this still seems weird to you, you are out of luck. So you might then consider that your initial supposition, that consciousness is real, is perhaps misguided. And so you begin to consider eliminativism.

The argument for eliminativism

You might think something like this: science gets things right, human beings are biased and make mistakes when thinking, and maybe it is more likely that this great edifice of science is right than that you are right about this spooky conscious thing. Plus, consciousness seems really removed from your network of beliefs about nature! One can picture a sort of causal graph with everything in nature connected by the forces of nature, giving nice bidirectional interactions... and then there's this thing that you don't know where to put. Maybe there is no such thing! In the past we wondered whether there was something magical about life, and we thought there was an élan vital that made the difference between the inanimate and the living; now we know it was atoms and their interactions all along. Consciousness will turn out to be the same: another élan vital, one that, if anything, is more intuitive than the original.

...

...

Cogito ergo sum tho. And given that consciousness is obvious to us, the eliminativist bears the burden of proof. So the eliminativist tries to find arguments against the 'I feel conscious' premise.

Critiques and countercritiques of realism

Rob Bensinger recently posted an article, the immediate cause of my writing this, in which he tries to do just that.

Bensinger considers a series of arguments. Here I will summarise each argument he is replying to, then his response, then mine (A is the argument, R is the reply, C is my counter-reply).

A1: There is no seeming without phenomenal properties; we can't seem to be conscious yet not be. If something seems some way to us, we might be mistaken about the content of the seeming, but not about the seeming itself.

R1: Think of visual illusions. They seem one way, but they aren't. It perceptually seems one way, but doxastically seems another (we don't believe what our eyes show us; we believe what we have determined to be the case, e.g. that the lines are equal in the Müller-Lyer illusion). We can have the perceptual seeming that we have phenomenal states, but we can correct that intuition and still see consciousness as an illusion. And I (Rob) deny that if you doxastically instantiate a property you therefore instantiate it, that is, your belief in consciousness does not mean you are conscious. There is a case where a woman is told she will be touched with a hot poker, but is touched with an ice cube instead. It might seem to her that she feels a burning sensation, but she might be instantiating cold qualia.

C1: I would interpret the perceptual/doxastic distinction differently. I would say that we have a seeming that X, and then there may be defeaters for the seeming. A belief would be something justified by, but different from, the seeming. In both cases, having beliefs and having seemings are mental properties, which require qualia. If one has a seeming, one is then conscious. The hot poker example just shows that we can be confused about the contents of consciousness, not about having consciousness in the first place. This is not to deny that we can be confused about having consciousness itself: Daniel Dennett and other eliminativists are in such a state of confusion. To put it another way, this is like trying to argue against consciousness by arguing that any of our mental states might fail to track reality because a Cartesian evil demon might be misleading us. But there is still a conscious thing being deluded in that scenario!

A2: (Looks very similar to A1)

R2:

However, once it is granted that both doxastic and perceptual error is possible in the absence of the phenomenal, I see no principled way to rule out the possibility that zombies are capable of quasiperceptual metarepresentations as well, including erroneous ones. And such metarepresentations, I believe, do hold some promise of explaining the apparent presence of phenomenal properties in our stream of consciousness, as opposed to ignoring or flatly denying this pre-doxastic semblance.

This is something that I deny, of course. Zombies cannot make doxastic errors, as knowledge as commonly understood requires consciousness (cf. Searle). As for what zombies can or cannot do, it seems weird for an eliminativist to talk about zombies that way: after all, we are all zombies to begin with (according to Brian Tomasik, at least), so if we are capable of quasiperceptual metarepresentations, so are zombies. But the argument still seems confused: it still talks of phenomenal properties in a stream of consciousness, and I insist that the appearance of phenomenal properties refutes eliminativism.

A3: The eliminativist rejects a piece of data to be explained (consciousness), and says we are zombies! This is not consciousness explained, it is consciousness explained away!

R3: Yes, I deny some of the data. But sometimes we don't have the data we think we have; take my arguments more seriously.

C3: I'm taking them seriously... am I not?

A4: That we are conscious is something we know directly; we do not infer it from anything else. Thus it cannot be wrong.

R4: Optical illusions tho

C4: I agree with this argument. Seemings are not inferred, but can be defeated.

A5: Knowing that we are conscious does not come from a representation of a perception; rather, we are acquainted with the phenomenal directly. Thus it cannot be an illusion, unlike perception.

R5: Yes, it seems like that, but I reject it on theoretical grounds. What we call consciousness _seems_(!) to serve the purpose of self-modeling, and an artifact or side effect of this is the belief in consciousness. If I can't argue for this further, it is because of argument 7.

C5: It could well be that some processes serve to represent a self, but we can't have a false belief if we are not conscious. We couldn't have any belief at all. You could say that, since we are zombies, our brains generate this weird speech and behaviour that seems to suggest phenomenal properties, though there is no such thing. That would be coherent with eliminativism, but false, as we do have beliefs.

A6: Our perceptions cannot misrepresent the world, as they don't carry any evaluation. Only beliefs can misrepresent the world. So A2 and A4 again!

R6: I disagree, non-doxastic states are not always true, etc

C6: Not very relevant, regardless of the truth of the argument. What counts is whether the belief is right or not, not whether seemings or appearances can have doxastic valuation.

A7: The fact that we are conscious is self-evident, beyond any doubt. No argument is more obvious than such a strong seeming.

R7: It is possible to be mistaken about whether we are conscious or not. There are consciousness realists and anti-realists. At least one camp is wrong.

I will also grant that these properties quasiperceptually (and doxastically, prima facie) seem manifest, self-evident, pretheoretically obvious, infallibly self-revealing. But just as I denied that we really do phenomenally seem to have phenomenal properties, I also deny that the phenomenal really is manifest. I grant a watered-down semblance, and seek to explain it. But I do not grant as much as the realist wishes. [!!!!???]

There are defeaters for the initial intuition: physicalism, and the fact that consciousness cannot be reduced to physics. Plus, infallible knowledge looks problematic. We have been mistaken about the dynamics of our memory, reasoning, perception, imagination, and other mental faculties. It is implausible that such a complex and kludgy evolved system as the human brain would have acquired the capacity for infallible knowledge.

C7: Even if knowledge of consciousness is not infallible, it is still more plausible than the defeaters.

A8: Seems like the previous arguments

A9: Seems like the previous arguments

A10: Seems like the previous arguments

A11: Knowledge requires consciousness. I couldn't know that I am a zombie if I am not conscious.

R11: It is implausible that functional states cannot justify beliefs (ground knowledge), since if they couldn't, zombies would lack warrant for any belief.

C11: Not implausible. Indeed it is the case. Warrant cannot be had for that which is lacked. Zombies have no consciousness, beliefs, warrants, thoughts, seemings, or perceptions in the strong sense. Of course, one can be poetic and talk about non-conscious perception or non-conscious beliefs (as in 'AlphaGo knows that this board position is better than that board position'), but here we don't like poetry, we are more into hard, cold, truths.

A12: Most knowledge requires consciousness. Zombies lack warrant for most of their beliefs. My knowledge that, say, tomatoes are red depends on my being conscious of it. The beliefs that ground eliminativism could be defeated if consciousness is rejected.

R12: Phenomenal properties are not important to our everyday beliefs. What matters about the tomato producing the quale(!!!!!!!) is that it produces the same quale as us seeing red signs, etc. If we had inverted qualia, they would work just the same. So that knowledge does not depend on qualia.

C12: Imagine qualia are bridges. You can have bridges built of wood or steel, but you need bridges to get external phenomena into your awareness. Recall that the reason you are doubting realism and embracing eliminativism is that at some point some facts entered your awareness and you pondered them. Surely, one could argue, if epiphenomenalism is true, things are more complicated.

A13: Minds require consciousness. Saying 'I believe in eliminativism' or 'I've seen this article' depends on phenomenal states. When eliminativists say such things, they commit performative contradictions.

R13: I am being poetic when I say all of that.

C13: Fine. But it is confusing! Don't do that. I wouldn't do it. I'll discuss this later (in another post) when I discuss tomapsychism, the triple conjunction of eliminativism, panpsychism and identity theory.

A14: Intentionality requires consciousness. (I don't think this is very relevant given what has already been said, so I skip it.)

A15: That many people can't be wrong!

R15: Flat earth, pre-relativistic physics, etc.

C15: Agreed.

A16: Similar to the previous arguments.

A17: We can't conceive of ourselves as zombies

R17: You do it all the time, you feel like a zombie because you are one.

C17: ...

A18: Similar to the previous arguments.

A19: Succumbing to an illusion like this seems maladaptive. Why would our brains make this error?

R19: Evolution can also be used against consciousness: why did consciousness evolve? And maybe the cognitive error is not too great. Maybe it is a spandrel.

C19: I agree that the argument from evolution can cut both ways. I also agree that A19 fails insofar as the illusion could be a spandrel, or could be adaptive for other purposes (Robert Trivers has argued that self-deception can be useful).

A20: If consciousness does not exist, then meta-ethical nihilism follows. This can't be!

R20: Perhaps we could reconceive ethics? In the same way that we can say that machines 'believe' things, we could have concepts of good, bad, suffering, or happiness that do not rely on consciousness.

C20: This looks like poetry and sugarcoating. But I don't like argument A20. If eliminativism implies nihilism, that is no problem. Nihilism is not epistemically impossible or anything like that.

I suspect that a number of my responses will not feel very satisfying to those who found the objections initially plausible. In a number cases I do little more than say, ‘Your premise, if true, would refute my view; but I can consistently affirm that your premise only seems true.’ Such arguments by their very nature can’t get eliminativism off the hook. They neither dispense with the semblance nor explain it—not in robust cognitive detail, at any rate.

So it seems indeed.

Yudkowsky's argument, again

I now take a closer look at Eliezer Yudkowsky's argument against epiphenomenalism. Recall that in the post I linked above, I argued that his argument does not refute zombies. But does it refute epiphenomenalism?

His argument, in a nutshell, runs as follows:

1. Consciousness seems to cause things, such as our yelling when we feel very hot temperatures (our awareness of the qualia is the cause of the yelling).

2. In order to know about something, you must be in causal contact with it.

3. If epiphenomenalism is right, then the reason why we talk about consciousness has nothing to do with the fact that we are conscious.

4. Chalmers postulates consciousness as something extra, but makes it explicit that (under epiphenomenalism) there is a separate reason why we talk about consciousness (point 3). Why postulate consciousness, then? If you postulate it, at least make it possible for it to do something (interactionism).

I agree with point 1 and point 3. Point 4 is problematic because consciousness is not postulated but assumed as a piece of data to be explained. Epiphenomenalism is then proposed as an explanation that leaves physics as it is.

Point 2 could be more problematic. I know some things about objects without ever having been in causal contact with them (for example, if A is bigger than B and B is bigger than C, then A is bigger than C). Imagine there is a rock inside a box inside a house on a planet outside our light cone, so that I cannot possibly have come into contact with it. Still, I would know something about it. But one could then argue that such knowledge is only knowledge of relations between things, not of the properties of things. Admittedly, our knowledge of consciousness under epiphenomenalism doesn't look like this, so it is not trivial to work around the lack of causal effect of consciousness.

But still, it looks weird. The belief that consciousness does something could be explained away as an illusion without much trouble (defeating the seeming of point 1). But then the reason we say "It seems to me that I am conscious" has nothing to do with the fact that it does seem to me that I am conscious. Recall that our body goes around fully guided by the laws of physics (under epiphenomenalism) and, by definition, does not know anything about consciousness (there is neither causal contact with it nor any mental state in the brain separate from consciousness). How come this body is talking and writing about a property it has no reason to believe in? And why does it then turn out, magically, that it does indeed have that property? You could argue that, for whatever reason, there is a widespread cognitive failure that makes advanced mental architectures output statements about something. But how come this random something actually exists? It seems as likely as a flying magical unicorn existing, being pink, and being epiphenomenal. How could you possibly argue that such a thing exists? You couldn't say that the unicorn tells you so, because it is causally impotent. So if you say that it exists and you are asked why, you cannot give any reason. And if it turns out that it does exist, it would be a miraculous coincidence. The same applies to consciousness. There might be a way of rescuing epiphenomenalism, but the argument is indeed quite devastating. Chalmers himself seems to have moved away from it in recent years.

What do I think about...

- Mary's room: Mary does learn something new when she sees red. She could not have learned it just by reading neuroscience.

- The Chinese room: The room is not conscious, and does not know Chinese. The same goes for a replica of a brain built out of people passing messages around.

- Zombies: Are conceivable and possible, assuming that physicalism is an empirical (a posteriori) truth as is commonly believed. If eliminativism is true, we are all zombies.

- Artificial Consciousness: Possible, with the proper architecture. We can replicate anything living beings do, why not consciousness?

- Which theories I think are probably true, with probabilities, as of today:

  - Neutral monism/panpsychism (60%)
  - Interactionist dualism (30%)
  - Epiphenomenalism (10%)
  - Idealism (~epsilon%)
  - Non-interactionist dualism (~epsilon%)
  - Identity theory (~0%, as it rejects consciousness as real)
  - Eliminativism (~0%, as it rejects consciousness as real)

To finish, I quote Tomasik:

So we have a dilemma:

- conclude that the zombic hunch is one example of many in which our brains' intuitive reasoning fails, or

- maintain the zombic hunch, throw a monkey wrench into an otherwise basically flawless physicalist picture of nature (minus the mysteries of the origin and fundamental ontology of the universe), and insist on a conception of consciousness for which any explanation appears inadequate!

The choice seems clear to me. (Tomasik, 2017)

Indeed! Option 2 all the way! :)

Final words

As time goes on, we will have more information about how the brain works, and about whether or not there are any special forces that might mediate the interaction between the mental and the brain. Hopefully that will help solve this problem. Currently, the debate between eliminativists and realists is a bit hopeless. I admit that it eludes me how there can be people who disbelieve in consciousness. One might conclude that they must be insane, but eliminativists are far from insane: they are clever and thoughtful. Looking at both sides, I always find the comments and arguments of people like Graziano or Dennett to be missing the point, or begging the question. They probably think the same of the realist. But that is to be expected in a conflict over such a basic intuition. Typically, we would use shared intuitions to settle uncertain propositions. But here, even if we agree on almost everything, we cannot resolve this disagreement so easily.

One final thing: if you have any further ideas about this, please do tell me. Also, if you assume that consciousness obviously works in way X and that I am stupid for not seeing it, you are probably wrong, though I would be glad if you were actually right. I'm guessing that most educated readers will feel close to epiphenomenalism and will try to defend it and make it sound more plausible. I have not devoted enough time here to all of the views, because the point was not to establish what the answer is, but what it is not. I end by remarking that my intention has not been to say 'I have solved this, here's the answer', but rather 'Help continue the work of understanding that which enables understanding itself, but please accept that there is such a thing to begin with'.

Feel free to leave interesting links, books, and papers in the comment section.

Appendix: Summary table

| Theory | Is consciousness really real? | Is consciousness merely real? | Zombies possible? | Advantage | Disadvantage |
|---|---|---|---|---|---|
| Eliminativism | No | No | Yes, we are all zombies | Preserves physics | Dismisses consciousness |
| Identity theory | No | Yes | No, consciousness is function | Preserves physics, sounds nice | Dismisses consciousness |
| Epiphenomenalism | Yes | N/A | Yes | Preserves physics and consciousness | Consciousness causally impotent |
| Interactionist dualism | Yes | N/A | Yes | Preserves consciousness, gives it causal role | Requires modification of physics |
| Neutral monism | Yes | N/A | Probably yes | Preserves physics and consciousness, gives it causal role | Unclear if this is really a different view or really a form of dualism. |

Comments from WordPress

David Pearce 2017-04-16T12:18:12Z

Artir, thanks. Billions of words have been written on the problem of consciousness. One can’t read them all. However, one can read in a weekend all the theories that make novel, empirically falsifiable predictions. Like many people, I don’t really understand how Orch-OR explains consciousness or phenomenal binding - or indeed the prowess of Penrose-class mathematicians. This doesn’t matter because Penrose/Hameroff and their critics can agree. The failure of interferometry to find the slightest collapse-like deviation from the unitary Schrödinger dynamics will falsify Orch-OR.

Likewise with non-materialist physicalism. I suspect most people will find e.g. the implication that consciousness is c. 13.8 billion years old, or that superfluid helium is a macroscopic quale (and so forth) desperately implausible if not nonsensical. It’s an intuition I share. However, when the non-materialist physicalist says that e.g. one’s experience of a phenomenally bound perceptual object consists in a coherent neuronal superposition (rather than synchronous firing) of distributed feature-processors, this isn’t just a bizarre “philosophical” opinion. It's an independently falsifiable empirical prediction - regardless of how insane it sounds. Molecular-matter wave interferometry cannot lie. I originally drew my calculations of credible decoherence timescales in the CNS from quantum mind critic Max Tegmark – who regards them as the reductio ad absurdum of quantum mind, not a test. A radical eliminativist about consciousness can respond that a Popperian plea for novel falsifiable predictions is unreasonable. No phenomenon exists to be explained! Here I’m a boring consciousness realist. Anything above-and-beyond the first-person contents of my own mind is a theoretical inference to the best explanation. Take away my “raw feels” and there’s nothing left – not even an introspective void...

Artir 2017-04-13T07:47:11Z

So it seems I'm not getting more comments. I will write a reply next week.

David Pearce 2017-04-15T18:58:06Z

Let’s assume physicalism: no “element of reality” is missing from the mathematical formalism of (tomorrow’s) physics: quantum field theory or its generalisation. Does reality consist of (1) fields of insentience (eliminativist materialism)? (2) fields that are sometimes sentient and sometimes insentient? (3) fields of sentience (non-materialist physicalism)?

Non-materialist physicalism (3) is sometimes conflated with property-dualist panpsychism or Russell’s neutral monism. But non-materialist physicalism isn’t the claim that primordial experience is somehow attached to physical properties at a fundamental level (i.e. property-dualist panpsychism), or the claim that the “fire” in the equations is neither physical nor experiential but an unknown tertium quid (i.e. Russell’s neutral monism: https://www.amazon.com/Consciousness-Physical-World-Perspectives-Russellian/dp/0199927359). Rather, non-materialist physicalism proposes that phenomenal experience is the intrinsic nature of the physical that the formalism of QFT describes. Your own conscious mind discloses the “fire” in the equations! We might perhaps call this position “idealistic” physicalism: the entire mathematical machinery of physics gets transposed to a subjectivist ontology. But the term “idealism” has too much philosophical baggage. Critics are likely to confuse such a label with anti-realism, or Bishop Berkeley, or the German idealists, or the claim that “consciousness collapses the wave function” (etc): I’ve never collapsed a wave function in my life.

This brings us to the phenomenal binding/combination problem. The binding problem sounds very bad news for non-materialist physicalism: the seeming impossibility of a solution drives David Chalmers to dualism. On the contrary, it’s very good news. Finally we can escape philosophising – and exchanging untestable intuitions on whether the intrinsic nature of the physical could or couldn’t be experiential – and instead extract novel, precise, experimentally falsifiable predictions. And they really are empirical predictions, not just retrodictions: on the face of it, the structural mismatch between our phenomenally bound minds and the microstructure of the CNS is unbridgeable, just as David Chalmers recognises. Phenomenal binding is classically impossible. Yet if non-materialist physicalism is true, then a perfect structural match must exist between our phenomenally bound minds and (ultimately) the formalism of QFT. Anyone who appreciates the raw power of decoherence (cf. https://arxiv.org/pdf/1404.2635.pdf) in the “warm, wet and noisy” CNS can tell you that an interferometry experiment like https://www.physicalism.com/#6 could at most yield the nonclassical interference signature of functionless "noise". If I’m talking the proposal up (which alas makes one sound more like a crank than a disinterested truth-seeker), it’s not because I'm confident the answer is correct, but rather because I worry that the interferometry experiment needed to test it will otherwise never get done.

Artir 2017-04-15T19:48:51Z

Hi David,

I will probably write a second post addressing your proposal (and panpsychism and non-neutral monism). In principle, yours seems to make more sense than the others; however, it is difficult to understand what exactly you are saying. Brian Tomasik and others also expressed similar thoughts. I hope a closer look at your published material will help me. To be fair, I find that many people are unclear when they talk about consciousness, and I include Dennett here (but not Chalmers; he is quite easy to understand and does not try to hide the implications of what he is saying).

Out of all of the proposals I've seen, only yours and Penrose's Orch-OR at least propose an experiment to test them (as far as I know). I hope those experiments are done soon.

Artir

Simon 2017-04-08T04:01:19Z

Hello, good post.

I'm pretty sure that what you call "identity theory" (in which you appear to include computational functionalism) is correct. However, I don't think I quite agree with the description you give of this position (or with the description given by Sean Carroll, although he's very close).

a) The only one of your five theses that I deny is (5). I think that with sufficient understanding, consciousness will be described using algorithmic language that will describe the behavior of neurons firing (in a human brain), in much the same way that today we can describe a character interacting with a 3D world in a video game using algorithmic language that describes the behavior of electrons moving in transistors. The only reason that this seems impossible to us is that we are one or many major insights away from the required understanding.

b) I don't think "poetic" is the right word to describe the relationship between the concept of 'consciousness' and the neuronal activity in a human brain. Is it "poetic" to call a car "a car" instead of talking about the way that the engine interacts with the steering and transmission systems, and so on? No, it's the correct English word to refer to a long list of specific components assembled in a specific way, capable of specific behavior.

c) Likewise, if something is "merely real" (as opposed to really real) if it's made of parts, that means that everything that exists except fundamental particles (and, if you're right, consciousness) is merely real. Water molecules, bacteria, human bodies, human societies, stars, and the universe as a whole are all "merely real". Doesn't it seem strange that you've just declared yourself an eliminativist about 99.99%+ of the concepts you think and talk about every day?

So as a computational functionalist I would say that:

1. Consciousness is really real, and like everything else it's made of parts.

2. We're not p-zombies because p-zombies are not possible, although a human mind can conceive of them, in the same way that someone who doesn't understand cars can conceive of a car moving without an engine. However...

3. It might be possible to assemble a machine that outwardly looks like us and behaves like us and yet isn't conscious, but if we were to take a good look at its 'brain' (or whatever material mechanism makes it act and react), we would see that it doesn't work like ours at all. Therefore, the Chinese room isn't conscious if it's just implementing a bunch of "if input X, output Y" rules, but a replica of a human brain implemented by something that's not neurons would be conscious, whether that something is transistors moving electrons around or people passing messages around.

4. Mary does learn something new when she sees red, kinda, using a somewhat loose definition of "learn": Her neurons will fire in a way that they've never fired before, and shortly after the neurons that store her memories will be configured in a new way to hold a new memory.

5. All the theories of mind that state that consciousness is not made of parts amount to taking a mysterious concept, putting it in a box, labeling this box "fundamental", and declaring the problem solved. This is not good philosophy.

6. I'm not sure what to think about eliminativists. I've read some things by Dennett that make me think he'd agree with everything I've written here except perhaps point (4) about Mary's room, but if Tomasik really thinks we're p-zombies I'm at a loss.

Joe 2017-05-12T05:13:44Z

I disagree with your 3). Note that we do not currently know what the human brain's 'consciousness algorithm' looks like, yet we are quite sure that whatever it turns out to be will not invalidate our claim to being conscious. Therefore whatever your alternative algorithm that perfectly mimics consciousness without actually being conscious looks like, it could have been our implementation to begin with. These ideas are in conflict: to insist that a perfect consciousness imitator is nonetheless not truly conscious, we would have to admit that whether we are conscious ourselves depends on the algorithm our minds use, and that if it turns out to match that of our hypothetical unconscious imitator then we must not be conscious.

The solution is to realise that 'conscious' is a word describing the behaviours we think of as conscious, not a specific underlying implementation (since we call ourselves conscious without knowing what our implementation is), so by definition any consciousness imitator is truly conscious. However, this doesn't mean we are guaranteed to be able to easily and effectively check for consciousness -- we have to think of tests that capture everything we mean by the word. And of course a consciousness imitator might only become conscious when it's trying to persuade us that it is, returning to unconsciousness the rest of the time.

Simon 2017-04-08T21:53:10Z

Tomasik definitely isn't saying what you think he's saying. The paragraph immediately after your final quote of him is this:

"Note that rejecting the zombic hunch does not cast doubt on the certainty of your being conscious. The only dispute is about whether zombies are logically consistent, i.e., whether we should conceive of consciousness in non-analytic terms. This is a technical question on which your certitude of being conscious seems to have little to say."

Brian Tomasik 2017-04-08T22:25:29Z

Cool post. :)

> the idea that consciousness is really a poetic name for certain patterns of brain activity. This is the identity theory.

Relative to my (fallible) understanding, I would still call this a type-A theory of consciousness (and you go on to agree that it's just a less blunt way to explain eliminativism). I understand identity theories as type-B views, which hold that consciousness is really real and happens to be identical (whatever that means) to certain physical processes. (Of course, I don't think this makes sense, and I see identity theories as disguised forms of property dualism.)

> You can have bridges built of wood or steel, but you need bridges to get external phenomena into your awareness. Recall, the reason you are doubting realism and embracing eliminativism is that at some point, some facts entered your awareness and you pondered them.

If zombies are possible, then zombies get facts and ideas into their brains without consciousness. Zombies can make statements about their being conscious, can know things about the world, etc.

Comments